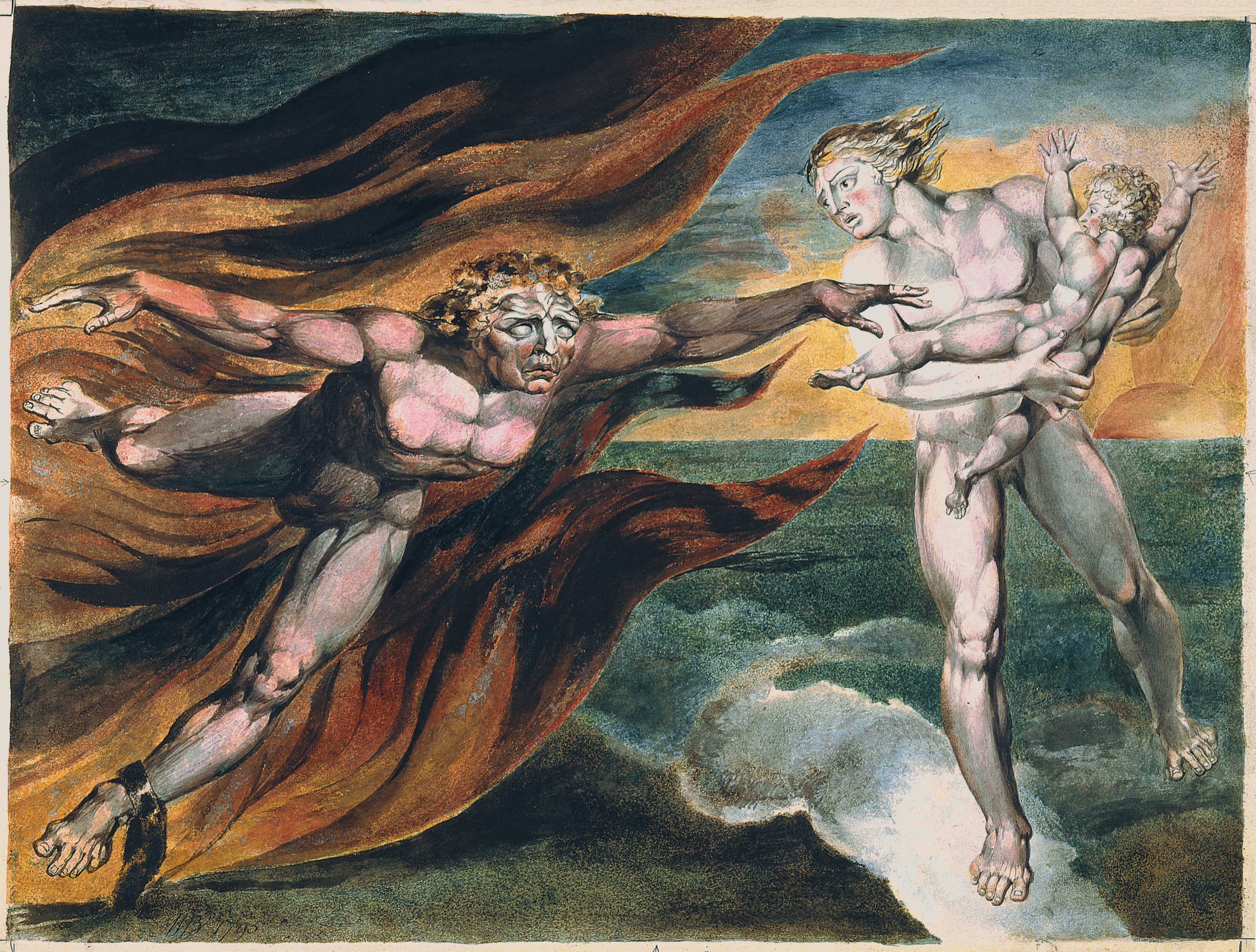

Yin and Yang. Apollo and Dionysus. Heaven and Hell. Id and Superego. Reason and Emotion. Spock and McCoy. Many intellectual and moral frameworks are structured around two, often opposing, elements. If one element gains the upper hand over the other, beware. William Blake, for example, in The Marriage of Heaven and Hell, took aim at the imbalance he saw in Christian theology. God, Heaven, and the Good were the “passive that obeys reason,” our Apollonian side. Satan, Hell and Evil were the “active springing from Energy,” wrongly suppressed by the Church, the Dionysian excess that “leads to the palace of wisdom.”

Data analysis in the social sciences has two forms, one “good” that is highly developed and has many rules that supposedly will lead us to truth, and one “bad” that lives in the shadows, has few if any rules, and is frequently, but wrongly, vilified. This imbalance is crippling the social sciences.

Angelic confirmation

Science often proceeds in roughly three parts: notice a pattern in nature, form a hypothesis about it, and then test the hypothesis by measuring nature. The challenge in testing our hypotheses is that the world is noisy, and it can be very difficult to distinguish the signal — the patterns in our data that are stable from sample to sample — from the noise — the patterns in our data that change randomly from sample to sample.

Statistics, as a discipline, has focused almost exclusively on the challenges of the third part, hypothesis testing, termed confirmatory data analysis (CDA). CDA is (and must be) the epitome of obedience: obedience to reason, to logic, to complex rules and to a meticulous, pre-specified plan that is focused on answering perhaps a single question. It is Apollonian, governed by the “good” angels of Blake’s Heaven.

Although CDA is one of science’s crown jewels, it is not designed to use data to discover new things about the world, i.e., unexpected patterns in our current sample of measurements that are likely to appear in future samples of measurements. CDA can reliably distinguish signals from noise in a sample of data only if the putative signal is specified independently of those data. If, instead, a researcher notices a pattern in her data, and then tries to use CDA on those same data to determine if the pattern is a signal or noise, she will very likely be misled.
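To see how badly this can go, here is a minimal simulation sketch. Everything in it is my own illustrative assumption: 20 candidate predictors, 50 observations per study, and a Fisher z-test at the nominal 5% level. All the data are pure noise, so every "significant" result is a false positive. The researcher simply notices the strongest-looking correlation and then tests it on the same data.

```python
import math
import random
import statistics


def correlation(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def is_significant(r, n, z_crit=1.96):
    """Approximate two-sided test of r = 0 at the 5% level (Fisher z-transform)."""
    return abs(math.atanh(r)) * math.sqrt(n - 3) > z_crit


def false_positive_rate(n_studies=500, n=50, n_predictors=20, seed=1):
    """Fraction of pure-noise studies in which the best-looking predictor,
    chosen and tested on the same data, comes out 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        outcome = [rng.gauss(0, 1) for _ in range(n)]
        # Every predictor is noise, so any "pattern" found here is spurious.
        rs = [
            correlation([rng.gauss(0, 1) for _ in range(n)], outcome)
            for _ in range(n_predictors)
        ]
        best = max(rs, key=abs)  # the pattern the researcher "notices"
        hits += is_significant(best, n)
    return hits / n_studies


rate = false_positive_rate()
print(f"False-positive rate after picking the best of 20: {rate:.2f}")
```

With 20 independent looks at noise, the chance that at least one clears a 5% threshold is roughly 1 - 0.95^20, or about 64%, so the simulated rate should land far above the nominal 5%.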

In a largely futile attempt to ensure that hypotheses are independent of the data used to test them, statistics articles and textbooks disparage the discovery of new patterns in data by referring to it with derogatory terms such as dredging, fishing, p-hacking, snooping, cherry-picking, HARKing (Hypothesizing After the Results are Known), data torturing, and the sardonic researcher degrees of freedom.

Notice something? The first, and arguably most important, step in a scientific investigation is to identify an interesting pattern in nature, yet we are taught that it is wrong to use our data to look for those interesting patterns.

That, my friends, is insane.

The Devil’s exploration

John Tukey, one of the most eminent statisticians of the 20th century, recognized that his discipline had put too much emphasis on CDA and too little on exploratory data analysis (EDA), his approving term for data dredging, which he defined thus:

- It is an attitude, AND

- A flexibility, AND

- Some graph paper (or transparencies, or both).

No catalog of techniques can convey a willingness to look for what can be seen, whether or not anticipated. Yet this is at the heart of exploratory data analysis. The graph paper—and transparencies—are there, not as a technique, but rather as a recognition that the picture-examining eye is the best finder we have of the wholly unanticipated.

Or, as he wrote in his classic text on EDA:

The greatest value of a picture is when it forces us to notice what we never expected to see.

For Tukey, data analysis was a critical tool for discovery, not only confirmation. As he put it, “Finding the question is often more important than finding the answer.” And “The most important maxim for data analysis to heed, and one which many statisticians seem to have shunned, is this: ‘Far better an approximate answer to the right question, which is often vague, than an exact answer to the wrong question, which can always be made precise.’” [1]

I want to draw out what I see as the radical implications of some of Tukey’s main points for norms in the social sciences.

The world, especially the world of human cognition and behavior, is far more complex than any of us can imagine. To have any hope of understanding it, to discover the right questions, we have no choice but to collect and explore high-quality data. Although running small pilot studies is tempting because they take little time and few resources, they can be worse than useless. The precision of our estimates improves only as the square root of sample size, so quadrupling a sample merely halves the standard error. EDA on small, noisy data sets will only lead us down blind alleys. Alternatively, because we social scientists get credit for confirmation, and exploration is actively discouraged, we disguise our shameful explorations as confirmations, all dressed up with stars of significance. And then those “effects” don’t replicate.
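To make the square-root point concrete, here is a small sketch that compares the simulated standard error of a sample mean with the textbook value sigma/sqrt(n). The sample sizes, the unit standard deviation, and the number of simulated samples are assumptions I chose purely for illustration.

```python
import math
import random
import statistics


def empirical_se(n, n_samples=1000, sigma=1.0, seed=0):
    """Standard deviation of the sample mean across many simulated samples of size n."""
    rng = random.Random(seed)
    means = [
        statistics.fmean([rng.gauss(0, sigma) for _ in range(n)])
        for _ in range(n_samples)
    ]
    return statistics.stdev(means)


for n in (25, 100, 400):
    theory = 1 / math.sqrt(n)
    print(f"n={n:4d}  simulated SE={empirical_se(n):.3f}  sigma/sqrt(n)={theory:.3f}")
```

Each fourfold increase in n should cut the standard error roughly in half, which is why a pilot study with a few dozen participants tells us so little.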

The solution is obvious: we must put at least as much effort into exploration and discovery as we put into confirmation, perhaps more. We will need to collect and explore large sets of data using the best measures available. If those measures do not exist, we will need to develop them. It will take time. It will take money.

But let’s face it: discovery is the fun part of science. EDA draws on the energy, instinct, and rebelliousness of Blake’s Devil and Nietzsche’s Dionysus, that heady mix of intuition, inspiration, luck, analysis, and willingness to throw received wisdom out the door that attracted most of us to science in the first place.

The marriage of CDA and EDA

Blake, Nietzsche, and perhaps all great artists and thinkers recognize that there must be a marriage of Heaven and Hell, that neither the Dionysian nor the Apollonian should prevail over the other. Tukey understood well that “Neither exploratory nor confirmatory is sufficient alone. To try to replace either by the other is madness. We need them both.”

Madness it may be, but without institutional carrots or sticks, EDA will remain in the shadows, a pervasive yet unacknowledged practice that undermines rather than strengthens science.

One carrot would be an article type devoted to exploratory research. It might be worthwhile, though, to wield a stick.

Tukey argues that “to implement the very confirmatory paradigm properly, we need to do a lot of exploratory work.” The reason is, there is “no real alternative, in most truly confirmatory studies, to having a single main question—in which a question is specified by ALL of design, collection, monitoring, AND ANALYSIS” (caps in the original).

Answering just one question with a statistical test requires decisions about, e.g., the sample population, sample size (which is based on estimated effect sizes and power), which control variables to include, choice of instruments to measure the variables, which model to fit and/or test to perform, whether and how to transform certain variables (e.g., log or square root transform), and whether to include interactions and which ones. To believe the standard textbooks, we can do all that with a single sample of data while at the same time avoiding the temptation to use any of these researcher degrees of freedom to p-hack.
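Take just the sample size decision. Here is a rough sketch of the usual normal-approximation formula for comparing two group means, n per group = 2((z_{1-alpha/2} + z_{power}) / d)^2, where d is the standardized effect size (Cohen's d). The function name and the default alpha and power are my own illustrative choices, and an exact t-based calculation would give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate per-group n for a two-sided, two-sample comparison of means,
    using the normal approximation; effect_size is Cohen's d."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


for d in (0.8, 0.5, 0.2):
    print(f"d={d}: about {n_per_group(d)} participants per group")
```

The answer swings from about 25 to about 393 per group depending on the assumed effect size, and that effect size has to come from somewhere. Usually it comes from having already looked at data.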

Hah!

If the replication crisis has taught us anything, it is that our statistical tests are surprisingly fragile: small modifications to our procedures can have a large influence on our results. It must therefore become a basic norm in much of science that a confirmatory study – especially one reporting p-values – must preregister “ALL of design, collection, monitoring, AND ANALYSIS.” Everything. In detail.

A good confirmatory study, then, is completely specified. Running it should be like turning a crank. As Tukey said (caps in original):

Whatever those who have tried to teach it may feel, confirmatory data analysis, especially as sanctification, is a routine relatively easy to teach and, hence,

A ROUTINE EASY TO COMPUTERIZE.

The standard I’m personally aiming for (but have not quite yet achieved) is to preregister our R code.

It will be impossible to achieve this ideal without EDA — without first looking at data to evaluate and optimize all the decisions necessary to run a high-quality confirmatory study. The stick I envision is that every confirmatory study would be required to have, at a minimum, two samples and two analyses. The first sample would be for EDA, the second for CDA. Every paper reporting results of a confirmatory study must also report the preceding EDA that justified each study design decision. Because the EDA would include estimates of effect sizes, each paper would contain an attempted replication of its main result(s).

In some cases, it will be possible to divide a single sample in two, and first perform EDA on one portion, and then CDA on the other. In other cases, it will be possible to use existing data for the EDA, and new data for the CDA. In many other cases, however, researchers will simply have to collect two (or more) samples. Requiring that every paper include an EDA on one sample and a subsequent CDA on a separate sample could cut researchers’ publication productivity in half. It could easily more than double their scientific productivity, however, meaning their publication of results that will replicate.
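For the single-sample case, the split itself can be as simple as the sketch below. The participant IDs, the 50/50 fraction, and the seed are all hypothetical. What matters is that the split is random and fixed before anyone looks at the data, and that the confirmation half stays untouched until the analysis plan is final.

```python
import random


def split_sample(records, eda_fraction=0.5, seed=2024):
    """Randomly partition records into an exploration set and a confirmation set."""
    shuffled = list(records)  # copy, so the caller's data are not reordered
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * eda_fraction)
    return shuffled[:cut], shuffled[cut:]


participants = list(range(1, 201))  # hypothetical participant IDs
eda_set, cda_set = split_sample(participants)
print(len(eda_set), len(cda_set))  # 100 100
```

Recording the seed alongside the preregistration would let a reader verify that the confirmation half was set aside before any exploration began.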

Notes

[1] Tukey was not the first to recognize the importance of exploring data, nor to clearly distinguish exploration from confirmation. De Groot made these points in 1956, for example, and even then he noted they were not new. Statistician Andrew Gelman recently raised the issue on his blog. Unlike others, however, Tukey devoted a chunk of his career to developing and promoting EDA. Much of his writing on the topic has an aphoristic flavor, which reminded me of Blake’s Proverbs of Hell. I recommend you read Tukey (1980); it’s short, with no math.